Ahead of the 2024 voting season, NewsGuard announced last week that it’s using AI to automatically prevent American citizens from seeing information online that challenges government and corporate media claims about elections.

“[P]latforms and search engines,” including Microsoft’s Bing, use NewsGuard’s “ratings” to stop people from seeing disfavored information sources, information, and topics in their social media feeds and online searches. Now censorship is being deployed not only by humans but also by automated computer code, rapidly raising an Iron Curtain around internet speech.

NewsGuard rates The Federalist as a “maximum” risk for publishing Democrat-disapproved information, even though The Federalist accurately reports major stories about which NewsGuard-approved outlets continually spread disinformation and misinformation. Those stories have already included the Russia-collusion hoax, the Brett Kavanaugh rape hoax, numerous Covid-19 narratives, the authenticity of Hunter Biden’s laptop, and the deadly 2020 George Floyd riots.

NewsGuard directs online ad dollars to corporate leftist outlets and away from independent, conservative outlets. The organization received federal funding for developing these internet censorship tools that now include artificial intelligence.

“The purpose of these taxpayer-funded projects is to develop artificial intelligence (AI)-powered censorship and propaganda tools that can be used by governments and Big Tech to shape public opinion by restricting certain viewpoints or promoting others,” says a recent congressional report about AI censorship. These “…projects threaten to help create a censorship regime that could significantly impede the fundamental First Amendment rights of millions of Americans, and potentially do so in a manner that is instantaneous and largely invisible to its victims.”

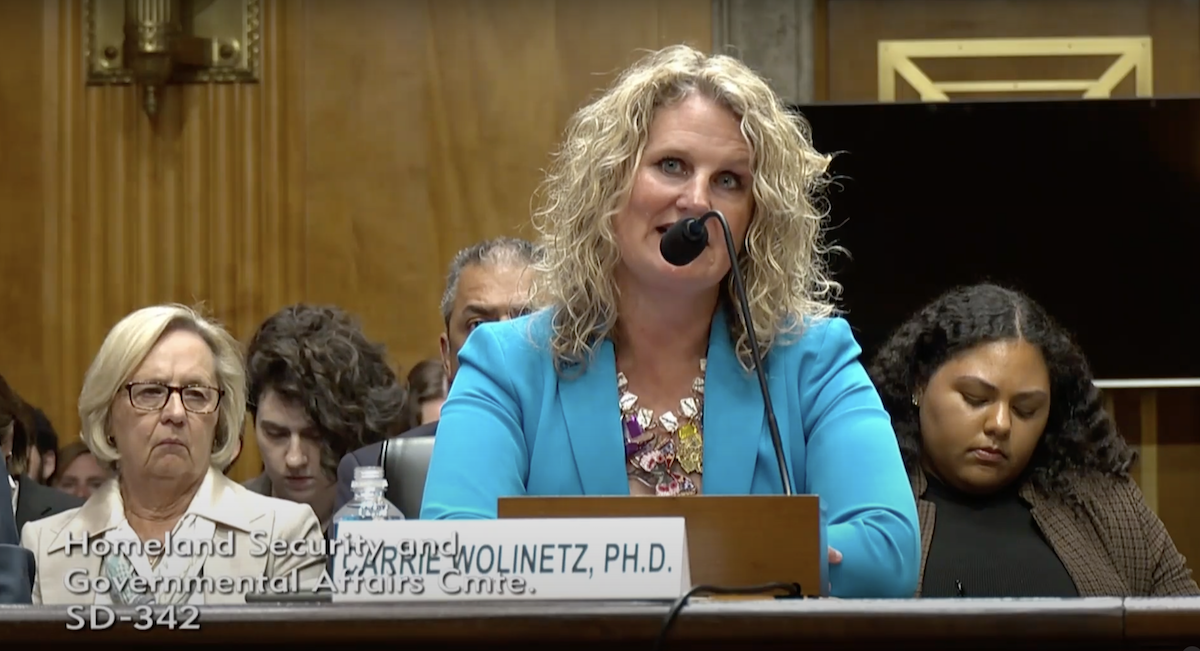

Numerous federal agencies are funding AI censorship tools, including the U.S. Department of State, the subject of a December lawsuit from The Federalist, The Daily Wire, and the state of Texas. The report last month from the House Subcommittee on the Weaponization of the Federal Government reveals shocking details about censorship tools funded by the National Science Foundation, one of hundreds of federal agencies.

It says NSF has tried to hide its activities from the elected lawmakers who technically control NSF’s budget, including by planning to take five years to respond to open-records requests that the law normally requires to be answered within 20 to 60 days. NSF and the projects it funded also targeted for censorship media organizations that reported critically on their use of taxpayer funds.

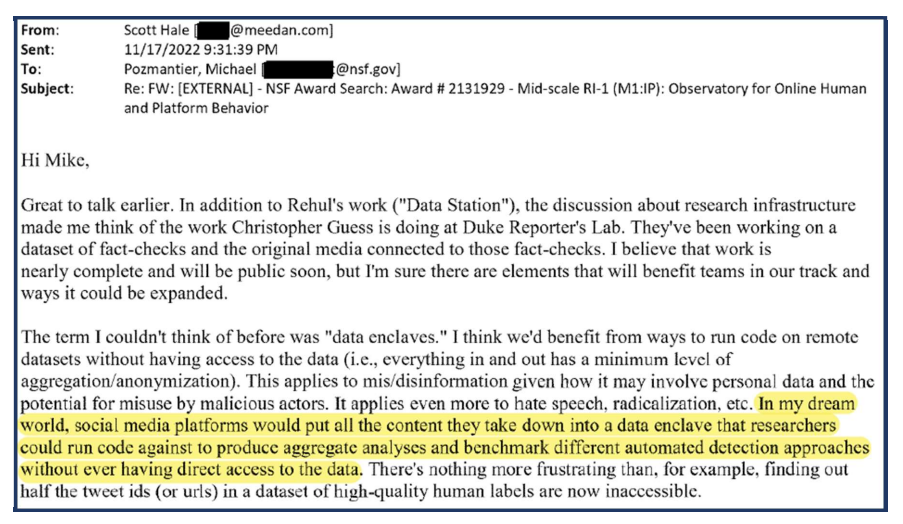

“In my dream world,” censorship technician Scott Hale told NSF grantmakers, people like him would use aggregate data on the speech censored on social media to develop “automated detection” algorithms that immediately censor banned speech online, without any further human involvement.

“Misinformation” that NSF-funded AI scrubs from the internet includes “undermining trust in mainstream media,” the House report says. It also works to censor election and vaccine information the government doesn’t like. One censorship tool taxpayers funded through the NSF “sought to help train the children of military families to help influence the beliefs of military families,” a demographic traditionally more skeptical of Democrat rule.

Federal agencies use nonprofits they fund as cutouts to avoid constitutional restraints that prohibit governments from censoring even false speech. As Foundation for Freedom Online’s Director Mike Benz told Tucker Carlson and journalist Jan Jekielek in recent interviews, U.S. intelligence agencies are highly involved in censorship, using it essentially to control the U.S. government by controlling public opinion. A lawsuit at the Supreme Court, Murthy v. Missouri, could restrict federal involvement in some of these censorship efforts.

Yet, as Benz noted, corporate media have long functioned as a propaganda mouthpiece for U.S. spy agencies. That relationship has continued as social media displaced legacy media in controlling public opinion. Today, dozens of highly placed Big Tech staff are current or former U.S. spy agency employees. Many of them manage Big Tech’s censorship efforts in conjunction with federal agency employees.

Nonprofit censorship cutouts use “tiplines” to target speech even on private messaging apps like WhatsApp. AI tools “facilitate the censorship of speech online at a speed and in a manner that human censors are not capable,” the House report notes. A University of Wisconsin censorship tool the federal government funded lets censors see if their targets for information manipulation are getting their messages and gauge in real-time how their targets respond.

A Massachusetts Institute of Technology team the federal government funded to develop AI censorship tools described conservatives, minorities, residents of rural areas, “older adults,” and veterans as “uniquely incapable of assessing the veracity of content online,” says the House report.

People dedicated to sacred texts and American documents such as “the Bible or the Constitution,” the MIT team said, were more susceptible to “disinformation” because they “often focused on reading a wide array of primary sources, and performing their own synthesis.” Such citizens “adhered to deeper narratives that might make them suspicious of any intervention that privileges mainstream sources or recognized experts.”

“Because interviewees distrusted both journalists and academics, they drew on this practice [of reading primary sources] to fact check how media outlets reported the news,” MIT’s successful federal grant application said.

People who did this were less likely to believe the federal government’s propaganda, making them prime obstacles to government misinformation. Emails and documents in the House report show that researchers are targeting people in these categories to figure out how to manipulate them into believing government narratives.